Critics Cite Potential Discrimination, Invasion of Privacy from AI Use

Citing potential harm to job applicants due to “affect recognition,” the AI Now Institute at New York University issued a report calling for an end to automated analysis of facial expressions in hiring and other major decisions, according to a Reuters story. In addition, the nonprofit Electronic Privacy Information Center has filed a complaint against HireVue with the U.S. Federal Trade Commission.

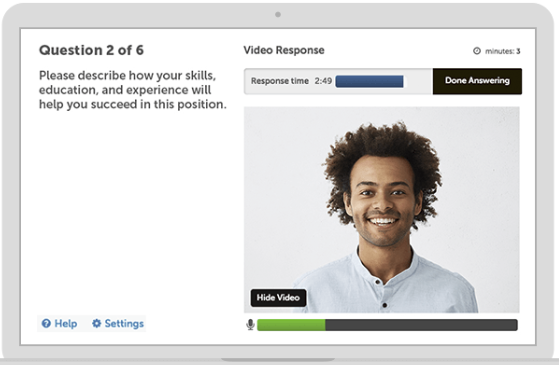

AI Now Institute said HireVue, which has sold systems for remote video interviews to more than 100 companies, including Hilton and Unilever, uses a system of analyzing facial movements, tone of voice and speech patterns that might harm job interviewees unnecessarily.

“How people communicate anger, disgust, fear, happiness, sadness, and surprise varies substantially across cultures, situations, and even across people within a single situation,” wrote a team at Northeastern University and Massachusetts General Hospital in the report.

Companies including Microsoft Corp are marketing their ability to classify emotions using software, the study said. Also, Amazon.com Inc offers analysis on expressions of emotion through its Rekognition software.

Fortune magazine quoted Frida Polli, chief executive officer of Pymetrics, an A.I. company that helps employers remove bias from the hiring process, who was highly critical of the flawed algorithms in use.

“Can you imagine if all the toddlers in the world were raised by 20-year-old men?” she said. “That’s what our A.I. looks like today. It’s being built by a very homogenous group.”

A story on vox.com said lawyers at the Electronic Privacy Information Center (EPIC), a privacy rights nonprofit, filed a complaint Wednesday asking the Federal Trade Commission to investigate HireVue for potential bias, inaccuracy, and lack of transparency. The complaint also accused the company of engaging in “deceptive trade practices” because the company claims it doesn’t use facial recognition.

The complaint follows the introduction of the Algorithmic Accountability Act in Congress earlier this year, which would grant the FTC authority to create regulations to check so-called “automated decision systems” for bias. The Equal Employment Opportunity Commission (EEOC), the federal agency that deals with employment discrimination, is already investigating two discrimination complaints.

The lack of oversight of AI use in hiring and other facets of life also extends to Europe, according to a story on the Financial Times website.

Image from HireVue website.

Even though the EU has strict laws against discrimination, new research from the Oxford Internet Institute finds that algorithms are drawing inferences about sensitive personal traits such as ethnicity, gender, sexual orientation and religious beliefs based on our browsing behavior.

Sandra Wachter, the academic behind the study, said these traits are used by online advertisers to either target or exclude certain groups from products and services, or to offer them different prices.

Under current data protection regulation, it is illegal for advertisers to target groups of people based on sensitive “special category” information. However, other groups created by algorithms based on apparently neutral characteristics such as “reader of Cosmopolitan magazine” or “interested in Bollywood films” (which may map closely onto related personal categories such as gender and ethnicity) fall outside of this legal framework.

Ironically, India is proposing a new law that would regulate the use of private data but also give the government access to it. Some say it would set a dangerous precedent that could lead to abuse.

Privacy advocates attacked the personal data protection bill, introduced last week, because it would give any government agency the ability to bypass personal-data safeguards on grounds like national security.

“This latest bill is a dramatic step backward in terms of the exceptions it grants,” internet company Mozilla told ft.com.
