Researchers Use Tremendous Amounts of Energy to Train AI Models

In many industries, artificial intelligence (AI) enables companies to improve energy efficiency, streamline operations and put robots in jobs that are physically damaging to workers’ bodies. Despite the benefits of using AI to solve problems, a study by researchers at the University of Massachusetts Amherst found that it creates an enormous problem of its own: a staggering carbon footprint.

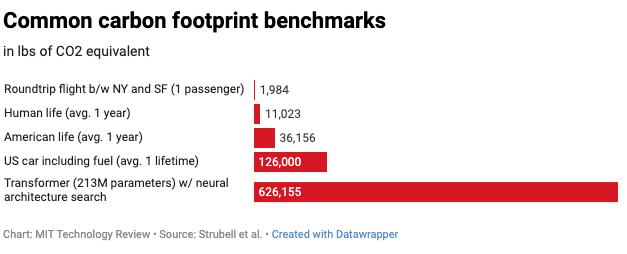

According to a story on MIT Technology Review’s website, technologyreview.com, training a single AI model can emit as much carbon as five cars over their lifetimes. The research paper, posted on arxiv.org, reports that the costliest training process the researchers examined produces more than 626,000 pounds of carbon dioxide equivalent.
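The five-car comparison can be sanity-checked with simple arithmetic. The sketch below divides the reported total by five to get the lifetime footprint per car that the comparison implies. It uses only the two rounded figures quoted above; the function name is our own, for illustration.

```python
# Rounded figures quoted in the article.
TRAINING_CO2E_LBS = 626_000  # pounds of CO2 equivalent for one training process
CARS = 5                     # number of car lifetimes it is compared against

def implied_per_car(total_lbs: float, cars: int) -> float:
    """Return the lifetime CO2e per car implied by the comparison."""
    return total_lbs / cars

per_car = implied_per_car(TRAINING_CO2E_LBS, CARS)
print(f"Implied lifetime footprint per car: {per_car:,.0f} lbs CO2e")
# prints "Implied lifetime footprint per car: 125,200 lbs CO2e"
```

Because the total is reported as "more than" 626,000 pounds, the implied per-car figure is approximate.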

“While probably many of us have thought of this in an abstract, vague level, the figures really show the magnitude of the problem,” says Carlos Gómez-Rodríguez, a computer scientist at the University of A Coruña in Spain, who was not involved in the research. “Neither I nor other researchers I’ve discussed them with thought the environmental impact was that substantial,” he told technologyreview.com.

The study focuses on one especially energy-hungry area of AI: training natural language processing, or NLP, models. NLP covers tasks such as machine translation and sentence completion, which serve as standard benchmarks for the field. OpenAI, for instance, created its GPT-2 model, which can write convincing fake news articles. Training such models on voluminous data sets scraped from the internet consumes much of the power.

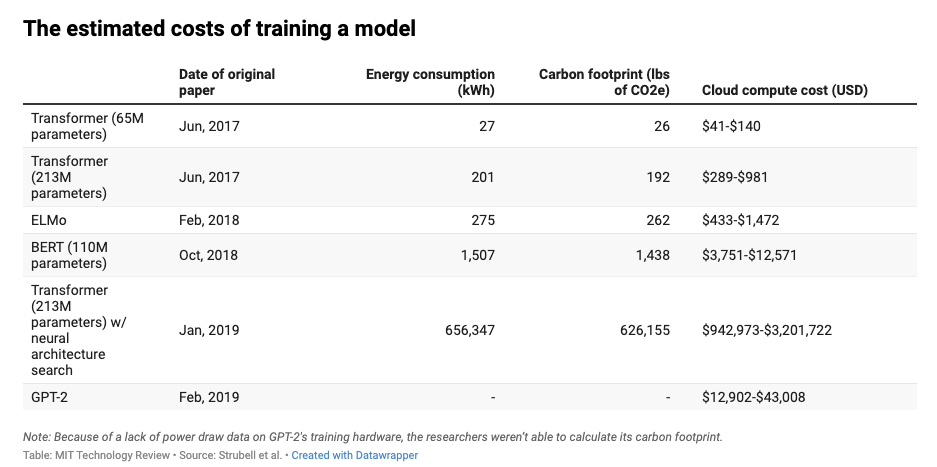

The researchers looked at four models in the field that have been responsible for the biggest leaps in performance: the Transformer, ELMo, BERT, and GPT-2. They trained each on a single GPU for up to a day to measure its power draw. They then used the number of training hours listed in each model’s original paper to calculate the total energy consumed over the complete training process. That number was converted into pounds of carbon dioxide equivalent based on the average energy mix in the US, which closely matches the energy mix used by Amazon’s AWS, the largest cloud services provider.
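The estimation procedure described above boils down to two multiplications: average power draw times training hours gives energy, and energy times an emissions factor gives CO2 equivalent. The sketch below is a simplified illustration, not the researchers’ code. The default factor of 0.954 pounds of CO2e per kilowatt-hour is the US-average figure reported in the paper and should be treated as an assumption if reused. The power draw and training time in the example are hypothetical, and other adjustments such as data-center cooling overhead are ignored.

```python
# Simplified sketch: power draw x hours -> kWh -> pounds of CO2 equivalent.
# Assumption: 0.954 lbs CO2e per kWh approximates the average US energy mix.
US_LBS_CO2E_PER_KWH = 0.954

def energy_kwh(avg_power_watts: float, training_hours: float) -> float:
    """Total energy in kilowatt-hours for a run at a constant average power."""
    return avg_power_watts * training_hours / 1000.0

def co2e_lbs(kwh: float, lbs_per_kwh: float = US_LBS_CO2E_PER_KWH) -> float:
    """Convert energy to pounds of CO2 equivalent using an emissions factor."""
    return kwh * lbs_per_kwh

# Hypothetical example: hardware averaging 1,000 W, trained for 100 hours
# (both numbers are illustrative, not taken from the study).
kwh = energy_kwh(avg_power_watts=1_000, training_hours=100)
print(f"{kwh:.1f} kWh -> {co2e_lbs(kwh):.1f} lbs CO2e")
# prints "100.0 kWh -> 95.4 lbs CO2e"
```

Keeping the emissions factor as a parameter makes it easy to swap in a different energy mix, which is the main lever on the final number once energy use is fixed.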

Even routine NLP research demands a great deal of energy. In a case study, the researchers found that building and testing a single “paper-worthy” model required training 4,789 models over a six-month period. Converted to CO2 equivalent, that work emitted more than 78,000 pounds, which is likely representative of typical work in the field.
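Spreading the case-study total across the models trained and the months worked gives rough averages. This is only arithmetic on the reported figures: it assumes emissions were split evenly across runs and months, which the study does not claim. Since the total is reported as "more than" 78,000 pounds, both averages are lower bounds.

```python
# Case-study figures quoted in the article.
TOTAL_CO2E_LBS = 78_000   # "more than 78,000 pounds", treated as exactly 78,000 here
MODELS_TRAINED = 4_789
MONTHS = 6

per_model = TOTAL_CO2E_LBS / MODELS_TRAINED  # average per training run
per_month = TOTAL_CO2E_LBS / MONTHS          # average per month of work

print(f"~{per_model:.1f} lbs CO2e per model, ~{per_month:,.0f} lbs CO2e per month")
# prints "~16.3 lbs CO2e per model, ~13,000 lbs CO2e per month"
```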

The authors of the study say they hope it will spur the AI community to develop more efficient hardware and algorithms that cut carbon emissions.
