Convolutional Neural Networks Capable of Seeing Emotions on Partial Faces

Imagine playing a virtual reality video game in which the characters react to the player’s racing pulse, increasing the action or egging them on to fight the virtual dragon.

It will soon be a “real” reality.

Researchers at Yonsei University and Motion Device Inc. have proposed a deep-learning-based technique that could enable emotion recognition during VR gaming. They presented their paper at the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces.

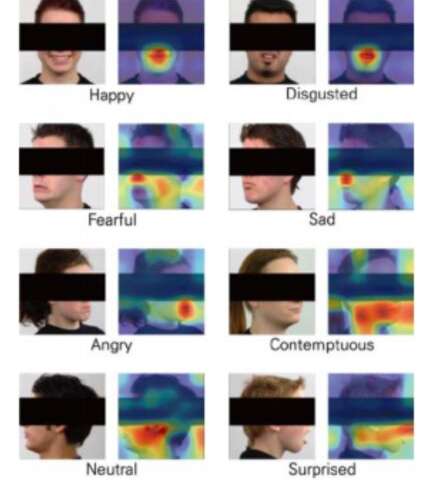

The researchers tested whether gamers’ emotions can be tracked while they wear VR headgear that covers the eyes, eyebrows and ears. They trained convolutional neural networks (CNNs) to estimate emotions from images of a face wearing a head-mounted display (HMD), which they simulated by hiding that part of the face in images from an existing face-emotion dataset. Their analysis showed that it worked.

The researchers used three CNNs, called DenseNet, ResNet and Inception-ResNet-V2, to predict people’s emotions from partial images of faces. They took images from the Radboud Faces Database (RaFD), which contains 8,040 face images of 67 subjects, then edited them by covering the part of the face that the HMD would hide during VR gaming.
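The occlusion step can be sketched roughly as follows. This is a minimal illustration, not the researchers’ actual preprocessing: the function name `mask_hmd_region`, the rectangular band, and the `top` and `bottom` fractions are assumptions chosen for the example, and a nested list of grayscale values stands in for a real face image.

```python
def mask_hmd_region(image, top=0.2, bottom=0.55, fill=0):
    """Return a copy of `image` with a horizontal band blanked out.

    `image` is a list of rows of grayscale pixel values. The band between
    the `top` and `bottom` fractions of the height is a hypothetical
    stand-in for the area a headset would cover.
    """
    height = len(image)
    first = int(height * top)
    last = int(height * bottom)
    masked = [row[:] for row in image]  # copy rows so the input is left untouched
    for y in range(first, last):
        masked[y] = [fill] * len(masked[y])
    return masked


# Toy 10x4 "image" in which every pixel has the value 255.
face = [[255] * 4 for _ in range(10)]
covered = mask_hmd_region(face)
```

Applying a function like this to every image in a labeled dataset would produce partially covered faces that keep their original emotion labels, which is the kind of training data the article describes.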

DenseNet performed better than the other networks overall, with an average accuracy of 90%, but ResNet outperformed the other two at identifying expressions of fear and disgust.
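The difference between an average score and per-emotion scores can be made concrete with a short sketch. The labels below are made-up toy values, not results from the study, and `per_class_accuracy` is a hypothetical helper written for this illustration.

```python
from collections import defaultdict


def per_class_accuracy(true_labels, predicted_labels):
    """Map each true label to the fraction of its samples predicted correctly."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, guess in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == guess:
            correct[truth] += 1
    return {label: correct[label] / total[label] for label in total}


# Made-up toy labels for illustration only (not data from the study).
truth = ["happy", "happy", "fear", "fear", "disgust", "disgust"]
guess = ["happy", "happy", "fear", "happy", "disgust", "fear"]

scores = per_class_accuracy(truth, guess)
overall = sum(t == g for t, g in zip(truth, guess)) / len(truth)
```

Because the overall figure pools every class, one network can lead on average while another does better on individual emotions such as fear or disgust.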

“We successfully trained three CNN architectures that estimate the emotions from the partially covered human face images,” the researchers wrote in their paper. “Our study showed the possibility of estimating emotions from images of humans wearing HMDs using machine vision.”

The breakthrough may lead to more engaging, interactive virtual reality experiences in which algorithms gauge users’ reactions and respond to them. Although the study was tailored to gaming, other applications could be developed. For instance, people learning to drive or fly could receive additional support based on their reactions. Classes on any subject could likewise be tuned more finely to students’ reactions, helping AI become better at teaching.

read more at m.techxplore.com
