MIT Algorithm Views Videos, Extrapolates Speakers’ Likeness

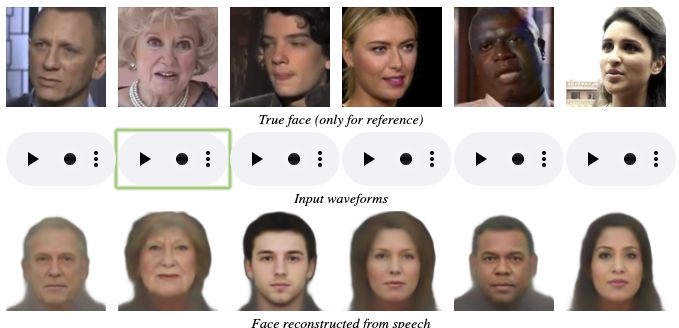

A study published at Cornell University’s database on computer science, arXiv.org, describes how the “Speech2Face” algorithm has created images of people based solely on the sound of their voices. Developed at the Massachusetts Institute of Technology, the AI draws from what it learned from watching millions of videos on the internet and YouTube to arrive at its images.

The researchers provided details of the Speech2Face program and its development on Github. Researchers presented the paper at the IEEE Conference on Computer Vision and Pattern Recognition this year. The authors write:

“Note that some of the features in our predicted faces may not even be physically connected to speech, for example hair color or style. However, if many speakers in the training set who speak in a similar way (e.g., in the same language) also share some common visual traits (e.g., a common hair color or style), then those visual traits may show up in the predictions.”

A story in Futurism.com marveled at the new technology, stating: “In practice, the Speech2Face algorithm seems to have an uncanny knack for spitting out rough likenesses of people based on nothing but their speaking voices.” It noted the author’s caution that it is a “purely academic exploration” and that the researchers are sensitive to the potential misuse of the technology.

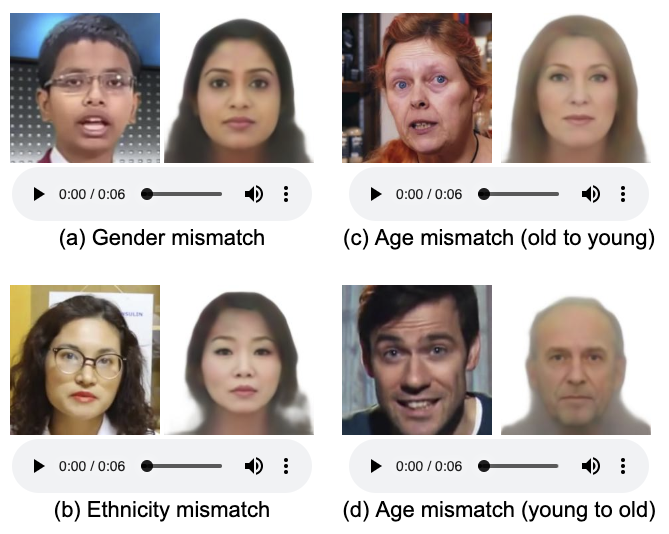

Almost as fascinating as the accurate depictions are the inaccurate ones in the graphic below:

Speech2Face failures

The authors of the paper explained the mistakes as being linked to variations on the norm in terms of speech. For instance, a high-pitched male voice or a child’s voice may trick the algorithm into predicting a facial image with female features. Sometimes the spoken language does not match ethnicity. Also, age mismatches can occur if a voice sounds “younger” than the speaker’s age.

Leave A Comment