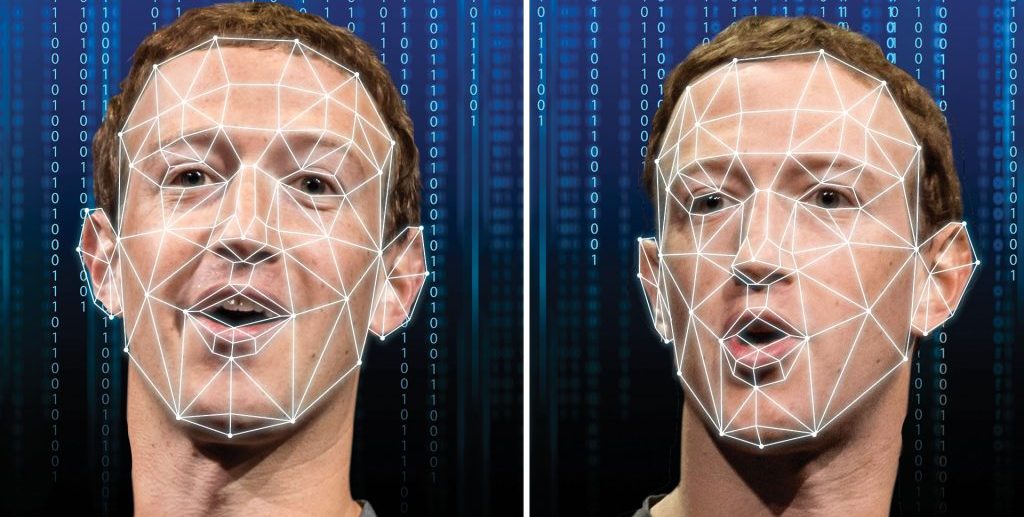

Illustration of analysis of Facebook CEO Mark Zuckerberg’s face. (credit: analyticsinsight.net)

Warning to Businesses: Deepfakes Can Involve Audio Files, Too

A story in Analytics Insight magazine outlines how deepfakes could lead to a massive breach of big data, though not in the way most people would expect.

According to the story, fabricated audio files could trick businesses into releasing information, money or access to bad actors who use the technology to imitate the voices of CEOs and other executives. Cybercriminals have already copied a CEO's voice to demand an urgent cash transfer.

According to The Wall Street Journal, which first reported the incident, the voice deepfake cost a UK energy company $243,000.

Fabricating a phone call or voice message, a social engineering technique known as vishing (voice phishing), could give cybercriminals dangerous access to corporate databases or accounts.

“The CEO of a U.K.-based energy firm thought he was speaking on the phone with his boss, the chief executive of the firm’s German parent company, who asked him to send the funds to a Hungarian supplier,” according to the WSJ story. “The caller said the request was urgent, directing the executive to pay within an hour, according to the company’s insurance firm, Euler Hermes Group SA.”

A story on threatpost.com explored the issue and suggested that this incident may be only the beginning of a new wave of cybercrime.

“In the identity-verification industry, we’re seeing more and more artificial intelligence-based identity fraud than ever before,” David Thomas, CEO of identity verification company Evident, told Threatpost. “As a business, it’s no longer enough to just trust that someone is who they say they are. Individuals and businesses are just now beginning to understand how important identity verification is. Especially in the new era of deep fakes, it’s no longer just enough to trust a phone call or a video file.”

According to analyticsinsight.net, using a VPN from a reputable provider could help businesses avoid such breaches, because VPNs mask identity and prevent hackers from interfering with communications.

The article adds that while "manipulated audio files might sound the same to the average human listener," AI cybersecurity programs can detect variations in pitch and speed and distinguish real audio from fake.

As the article reports, “more and more people are starting to educate themselves about the deepfake tech, and the implications the technology has on misinformation.”

Every opportunity should be taken to ensure that information sent and received is legitimate, and using AI-based programs is one of the easiest ways to do that, according to the article.
