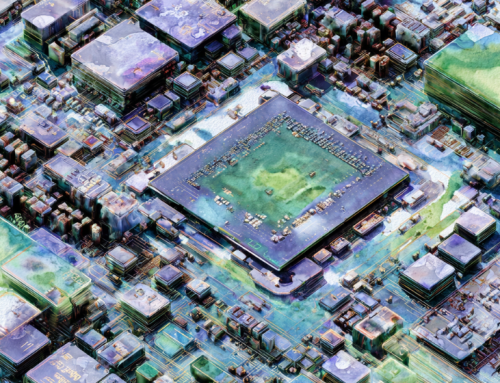

ChatGPT, Google Bard, and other AI chatbots simulate intelligence using large language models that run on pattern prediction rather than actual understanding, which makes them both powerful and deeply flawed tools in the digital age. (Source: Image by RR)

Large Language Models Rely on Word Prediction, Not True Understanding

AI chatbots such as ChatGPT and Google Bard, which are built on large language models (LLMs), are rapidly transforming how we interact with digital information, with capabilities ranging from web searching to composing creative literature. The LLMs behind them are trained on vast text datasets to generate natural-sounding responses, which is why they can feel intuitive and intelligent. Despite their impressive functionality, however, they don’t “understand” language or meaning in a human sense. Instead, they predict likely word sequences based on patterns in the text they were trained on, working much like a highly advanced version of autocomplete.
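To make the “advanced autocomplete” comparison concrete, here is a deliberately tiny Python sketch: a bigram model that counts which word follows which in a training text and then always predicts the most frequent follower. Real LLMs predict tokens with a transformer neural network conditioned on long stretches of context, not with word-pair counts, so treat this only as an illustration of next-word prediction from patterns. The function names and the toy corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # Counter.most_common breaks ties by the order in which words were first seen.
    return counts.most_common(1)[0][0]

def generate(follows: dict, start: str, length: int) -> list:
    """Greedily append the most likely next word, up to `length` extra words."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out

# Hypothetical toy training text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" twice, more than any other word
print(generate(model, "the", 4))
```

The model never checks whether its output is true or sensible; it only reproduces the most common continuation it has seen, which is the same limitation the article describes, at a vastly larger scale.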

As Wired notes, these models are built primarily on neural networks known as transformers, whose key feature is a mechanism called “self-attention.” Self-attention lets an LLM weigh how important each word in a sentence is relative to the others, giving it a more nuanced representation of context. Even so, because they rely on statistical likelihood rather than factual accuracy, they can and often do make mistakes, especially when asked for precise or lesser-known information. They may even generate “hallucinations”: fluent, convincing outputs that are simply false.
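The self-attention step can be sketched as scaled dot-product attention, the formulation introduced with the original transformer design: each token’s vector is projected into a query, a key, and a value; every query is compared with every key; the scores are divided by the square root of the key dimension and turned into weights with a softmax; and each output is a weighted average of the values. The plain-Python sketch below assumes a single attention head with no masking and uses tiny hand-made vectors; in a real model the projection matrices are learned and the vectors have hundreds or thousands of dimensions. All names here are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Turn scores into positive weights that sum to 1 (shifted by the max for stability)."""
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over token vectors `x`."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))       # square root of the key dimension
    scores = matmul(q, transpose(k))   # scores[i][j]: how well token i's query matches token j's key
    weights = [softmax([s / scale for s in row]) for row in scores]
    return weights, matmul(weights, v)  # each output row is a weighted mix of the value vectors

# Three hypothetical 2-D token vectors; identity projections keep the arithmetic easy to follow.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
weights, output = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

With identity projections, the first token ([1, 0]) gives equal weight to itself and to the third token ([1, 1]), because both keys have the same dot product with its query, and less weight to the second token. Each row of weights sums to 1, which is what “weighing the importance of each word” amounts to numerically.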

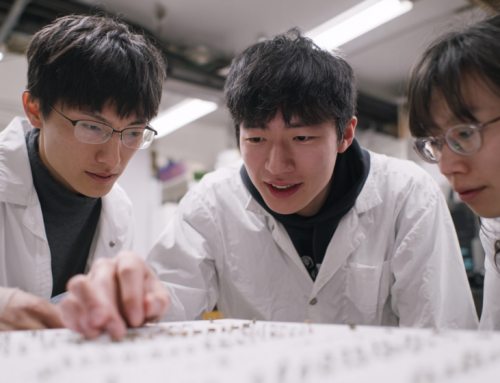

A major contributor to LLM effectiveness is human involvement through Reinforcement Learning from Human Feedback (RLHF). In this process, human reviewers rank the quality of answers, flag errors, and indicate which responses they prefer, and the model is then tuned toward those preferences. LLMs keep growing more complex (GPT-4, for example, reportedly uses around one trillion parameters), yet they still tend to produce generic, average, or clichéd responses. Their strength lies in pattern recognition, not genuine reasoning or creativity.
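The article does not say how the human rankings are used. One common formulation (used, for example, in OpenAI’s InstructGPT work) trains a separate reward model on the rankings with a pairwise loss, -log(sigmoid(r_better - r_worse)), averaged over every pair drawn from a ranked list; the LLM is then fine-tuned with reinforcement learning to score well under that reward model. The sketch below shows only the loss computation and assumes the reward scores are already given as plain numbers; the function names and scores are hypothetical.

```python
import math
from itertools import combinations

def pairwise_preference_loss(reward_better: float, reward_worse: float) -> float:
    """Return -log(sigmoid(reward_better - reward_worse)), computed stably."""
    diff = reward_better - reward_worse
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    # For negative diffs, rewrite the expression so exp() never overflows.
    return -diff + math.log1p(math.exp(diff))

def ranking_loss(rewards_best_first: list) -> float:
    """Average pairwise loss over all pairs from a human ranking (best answer first)."""
    if len(rewards_best_first) < 2:
        raise ValueError("need at least two ranked answers")
    # combinations() keeps list order, so the first item in each pair is the preferred one.
    pairs = list(combinations(rewards_best_first, 2))
    return sum(pairwise_preference_loss(b, w) for b, w in pairs) / len(pairs)

# Hypothetical reward-model scores for three answers a reviewer ranked best to worst.
print(ranking_loss([2.0, 1.0, 0.0]))  # scores agree with the ranking: lower loss
print(ranking_loss([0.0, 1.0, 2.0]))  # scores contradict the ranking: higher loss
```

The loss is small when the reward model already scores the preferred answer higher and large when it does not, so minimizing it pushes the reward model toward the human ranking.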

Ultimately, LLMs excel at sounding informed by mimicking existing text but fall short when asked to deliver nuanced or original insights. Their responses often reflect the biases and data quality of their training sources, and their core function remains rooted in word prediction. As these models evolve, users must understand their limitations as much as their strengths. The promise of AI is immense, but so is the need for critical engagement with how it’s built, trained, and deployed.

Read more at wired.com
