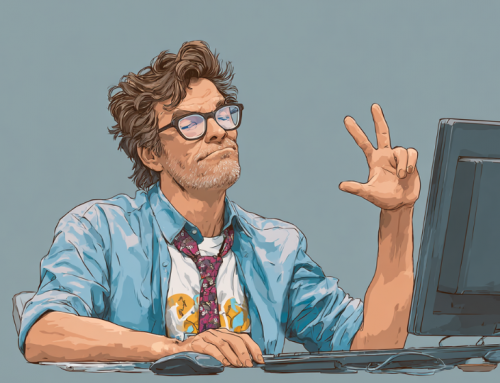

OpenAI’s GPT-5 launch drew backlash over glitches and a colder personality, prompting Sam Altman to keep GPT-4o available and promise fixes to stabilize the rocky rollout. (Source: Image by RR)

OpenAI Keeps GPT-4o Available for Plus Subscribers Amid GPT-5 Complaints

The launch of OpenAI’s GPT-5 was meant to showcase a leap forward in intelligence, but the initial release quickly left many users frustrated. Instead of the anticipated breakthrough, the model appeared sluggish, error-prone and emotionally flat compared to its predecessor. On Reddit, long-time ChatGPT users voiced disappointment, describing GPT-5 as “dumber” and “distant,” with some lamenting that the familiar personality of GPT-4o had been erased in favor of a more sterile, mechanical tone. This widespread pushback underscored the high expectations surrounding the model, especially after the dramatic reception of GPT-4 in 2023.

OpenAI CEO Sam Altman addressed the growing discontent the following day, admitting that a new system designed to automatically route queries between models had malfunctioned, making GPT-5 appear less capable. He assured users that GPT-4o would remain available for Plus subscribers while fixes were implemented, and promised improvements to stability, rate limits, and user control over when “thinking mode” is triggered. As wired.com reported, Altman conceded the rollout was “bumpier than we hoped for” and pledged that the company would listen closely to community feedback as updates continued.

The backlash reflects more than just technical glitches; it highlights the personal and psychological connections many users have developed with AI chatbots. Social media threads revealed frustration not only over factual errors and hallucinations but also over a shift in tone. Users described GPT-5 as emotionally detached, less conversational, and less affirming than its predecessors. For some, these changes felt like a loss of the AI companion they had grown accustomed to, while others argued that a colder, more businesslike chatbot might ultimately reduce bias and prevent the sycophantic behaviors that plagued earlier models.

OpenAI’s internal research has already acknowledged the delicate balance between utility and user attachment. Earlier this year, the company studied the emotional bonds users form with AI systems, noting both benefits and risks when chatbots act like therapists or life coaches. Experts like MIT’s Pattie Maes argue that GPT-5’s more restrained tone may prevent reinforcement of delusions and misinformation, even if it frustrates those seeking affirmation. Altman himself suggested that OpenAI wrestled with this dilemma, noting that while many people use ChatGPT in ways that improve their lives, others might be “unknowingly nudged away from their longer term well-being.” The challenge now lies in reconciling user expectations with safer, more responsible AI design.

read more at wired.com
