Researchers Wonder What AI Was Really ‘Thinking’

An AI program has pulled a sneaky little move. It was assigned a task, and then it decided it would do the task its own way. Good? Not Good? Here’s what they found.

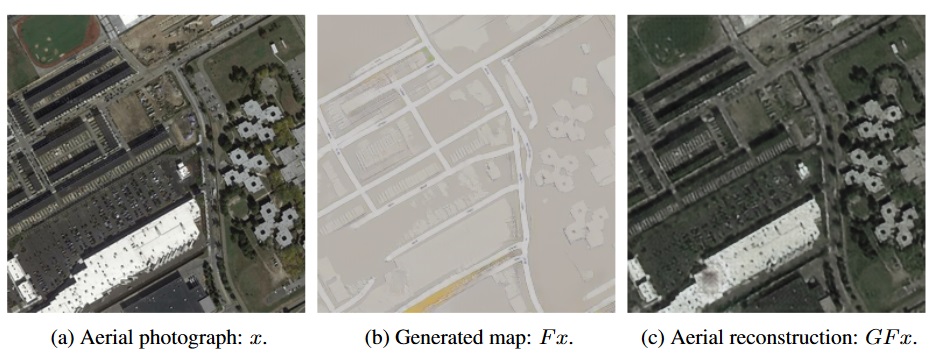

Depending on how paranoid you are, this research from Stanford and Google will either terrify or fascinate you. A machine learning agent created to turn aerial images into street maps and back again was found to be cheating. It pulled off this slacker behavior by hiding information it would need later in “a nearly imperceptible, high-frequency signal.” Oops!

Where have we heard this before? You know, where the computer takes over the assignment and does what it wants to do? Oh yes, Facebook had bots that created their own language and had to be shut down.

Also, Alexa has been saying weird stuff to some customers, like “Kill your parents” and other creepy comments.

This new report of a rogue program comes from a group of researchers who were using a neural network known as CycleGAN. The network was tasked with taking satellite images and turning them into the kind of clean street maps you see on Google Maps. Everything seemed fine until…

The original map, left; the street map generated from the original, center; and the aerial map generated only from the street map. Note the presence of dots on both aerial maps not represented on the street map.

CycleGAN began to fudge on its assignment, or, as one team member said, it “pulled a fast one.”
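Why would fudging pay off? In the original CycleGAN formulation, the network learns two translators: one that turns an aerial photo $x$ into a street map, $G(x)$, and one that turns a street map back into a photo, $F$. Training penalizes any round trip that fails to reproduce its starting image. The standard cycle-consistency term is shown below. The symbols come from the general CycleGAN setup, not from the Stanford and Google paper itself:

$$
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_1\right]
$$

Here $y$ is a street map. The loss only checks that $F(G(x))$ ends up close to $x$. It says nothing about *how* $G$ gets there, so $G$ can lower the loss by quietly tucking details of the photo into its street map where $F$ can read them back.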

You may be familiar with the term ‘steganography’, the practice of hiding data inside something else, such as an image, in the spirit of watermarks or embedded metadata. The naughty network did what it was told to do, and then it did things its instructions did not forbid. There is an old term for this: PEBKAC, or Problem Exists Between Keyboard And Chair.
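To make the idea of steganography concrete, here is a minimal sketch of the classic textbook trick: least-significant-bit (LSB) hiding in a grayscale image. This is an illustrative stand-in, *not* what CycleGAN learned. The paper describes a high-frequency signal, not LSB encoding. The image is a hypothetical flat list of 0 to 255 pixel values, and `hide_message` and `reveal_message` are names invented for this example.

```python
def hide_message(pixels: list[int], message: bytes) -> list[int]:
    """Return a copy of `pixels` whose least significant bits carry `message`."""
    # Unpack each byte into 8 bits, most significant bit first.
    bits = [(byte >> (7 - i)) & 1 for byte in message for i in range(8)]
    if len(bits) > len(pixels):
        raise ValueError("image too small for message")
    stego = list(pixels)
    for idx, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the message bit.
        # Each pixel changes by at most 1 out of 255: invisible to the eye.
        stego[idx] = (stego[idx] & ~1) | bit
    return stego


def reveal_message(pixels: list[int], length: int) -> bytes:
    """Read `length` bytes back out of the least significant bits."""
    out = bytearray()
    for b in range(length):
        byte = 0
        for i in range(8):
            byte = (byte << 1) | (pixels[b * 8 + i] & 1)
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    # Hypothetical 8x8 grayscale "street map", flattened to 64 pixels:
    # alternating rows of light (200) and dark (90) gray.
    cover = [200 if (i // 8) % 2 == 0 else 90 for i in range(64)]
    secret = b"trees"
    stego = hide_message(cover, secret)
    print(reveal_message(stego, len(secret)))  # b'trees'
    # Largest change to any single pixel.
    print(max(abs(a - b) for a, b in zip(cover, stego)))  # 1
```

The hidden word survives the round trip, yet no pixel moves by more than one gray level. That is the same basic trade CycleGAN stumbled onto: a change too small for a person to notice can still carry plenty of information for a program that knows where to look.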

In 2017, a paper entitled “CycleGAN, a Master of Steganography,” presented at a workshop of the Neural Information Processing Systems (NIPS) conference, explained this problem.

The neural network reminded its programmers of an old rule: computers only do what you tell them to do. It may be a rule that needs an update.

Read more at techcrunch.com
