DARPA Seeks Performance Gains by Tailoring Chip Architecture

DARPA announced this month that it will pursue two projects to create improved chips for use in AI applications. As Moore’s Law slows and eventually plateaus in traditional computing, governments and companies will need to research novel ways of optimizing performance with custom-tailored chip architectures.

While quantum computing is advancing and may obviate the need for advances in traditional silicon-based transistors, quantum computers are not ready for large-scale adoption. In the meantime, DARPA’s proposals mirror commercial efforts to push traditional chips to their limits in order to support advancements in AI.

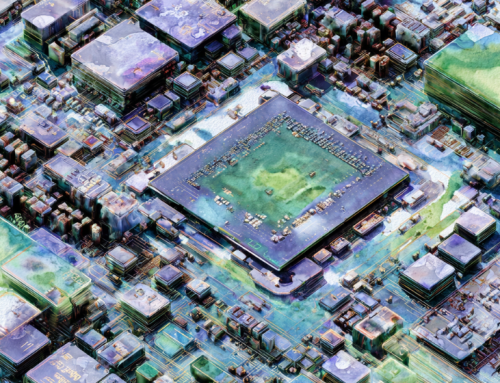

For 50 years, a foundational principle behind the development of microprocessors has been Moore’s Law. The law is an observation made by Intel co-founder Gordon Moore in the 1960s that the number of transistors in an integrated circuit doubles at a regular interval, effectively increasing microchip complexity. Moore’s original 1965 estimate was a doubling every year, which he revised in 1975 to every two years; 18 months is the figure most often quoted. The problem is that Moore’s Law is nearing its end, as transistors can no longer be effectively miniaturized to increase chip performance.
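To make the doubling rule concrete, here is a minimal sketch of the arithmetic. The function name `growth_factor` and the ten-year horizon are illustrative assumptions, not anything taken from DARPA or Intel; the code only compounds a fixed doubling period and says nothing about real chips.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Factor by which a count grows if it doubles every `doubling_months` months."""
    return 2 ** (years * 12 / doubling_months)


# Compare a few commonly quoted doubling periods over a hypothetical 10-year span.
for months in (12, 18, 24):
    factor = growth_factor(10, months)
    print(f"doubling every {months} months: about {factor:,.0f}x in 10 years")
```

Small changes in the assumed doubling period compound into very different outcomes over a decade, which is why a slowdown in the cadence matters so much for chip designers.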

This predicament, coupled with the need for higher levels of processing power, presents a hurdle that must be overcome in the continued development of artificial intelligence (AI). The Defense Advanced Research Projects Agency (DARPA), the research arm of the U.S. Defense Department, thinks that developing specialized circuits, known as application-specific integrated circuits (ASICs), is one of the ways to overcome these limitations. On Wednesday last week, DARPA announced two efforts built on this concept as part of its Electronics Resurgence Initiative.

One project, Software Defined Hardware, is developing “a hardware/software system that allows data-intensive algorithms to run at near ASIC efficiency without the cost, development time or single application limitations associated with ASIC development.” The second project is called Domain-Specific System on a Chip. Simply put, it combines general-purpose chips, hardware coprocessors, and ASICs “into easily programmed [systems on a chip] for applications within specific technology domains.”

Read more: DARPA: We Need a New Microchip Technology to Sustain Advances in AI